Top Gear (1992) AI

In this project, we aim to create an artificial intelligence agent that can play Top Gear (1992), a once-popular SNES racing game. Our goal is for the agent to play well enough to beat human players.

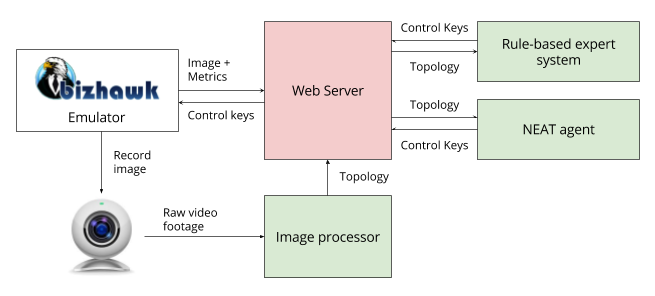

Data Interfacing Architecture

BizHawk is the emulator we used to run the Top Gear ROM. It provides many useful features, including the ability to toggle individual graphics layers so that only the parts we actually need are turned on. The entire program is also open source, which gave us the chance to tamper with the source code so that it could interact with components written in Python.

We follow a microservice pattern built around a centralized Python server. Every other component merely sends update requests to it, which keeps the system modular and easy to build. We also added a bottleneck in BizHawk so that it only sends new information after it has received an action to execute. Until that happens, the game stays frozen. This turned out to be quite beneficial, because the agent's performance does not depend on the stability of the connection.
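The lock-step handshake described above can be sketched in a few lines of Python. This is a minimal illustration only. It assumes both sides live in one process and talk through `queue.Queue` objects, whereas in the real project one side is the modified BizHawk and the other is the central server. The names `emulator_client`, `agent_server` and `choose_action` are hypothetical.

```python
import queue
import threading

# Hypothetical channels standing in for the real BizHawk <-> server connection.
state_channel = queue.Queue()   # emulator -> server: game state updates
action_channel = queue.Queue()  # server -> emulator: actions to execute


def emulator_client(num_frames, log):
    """Pretend emulator: publishes a state, then blocks until an action arrives."""
    for frame in range(num_frames):
        state_channel.put({"frame": frame})
        action = action_channel.get()  # the game is "frozen" until this returns
        log.append((frame, action))
    state_channel.put(None)  # signal the end of the episode


def choose_action(state):
    # Placeholder policy (assumption): press only button 0 on every frame.
    return [i == 0 for i in range(10)]


def agent_server():
    """Central server: waits for each state update and replies with one action."""
    while True:
        state = state_channel.get()
        if state is None:
            break
        action_channel.put(choose_action(state))


log = []
emulator = threading.Thread(target=emulator_client, args=(5, log))
emulator.start()
agent_server()
emulator.join()
print(len(log))  # 5: each frame advanced only after its action arrived
```

Because the emulator blocks on `action_channel.get()`, a slow link only slows the game down. It never causes frames to be played without an action.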

Training the agent with Neural Network

We use NEAT-python, a neural network library that trains networks through an evolutionary process. Compared to the traditional backpropagation approach, this training method has several advantages:

- Backpropagation spends a lot of processing power computing how to adjust the connection weights, while neuro-evolution simply applies random weight mutations and, thus, saves a lot of time during training.

- Backpropagation needs a “labelled” dataset to train on. In a game like Top Gear, it is often unclear whether a given action is right or wrong, so constructing a labelled set is very inconvenient. NEAT only needs a fitness score for each network, so it can train without a labelled set and sidesteps this problem.
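To make the contrast concrete, here is a minimal sketch of gradient-free weight mutation. No labels and no gradients are involved: each weight is, with some probability, nudged by Gaussian noise, and selection later keeps whichever networks score higher fitness. The function name and the `mutate_rate`/`mutate_power` parameters are illustrative assumptions, not NEAT-python's API. NEAT itself also mutates the network topology, which this sketch leaves out.

```python
import random


def mutate_weights(weights, mutate_rate=0.8, mutate_power=0.5, rng=None):
    """Return a mutated copy of `weights` (hypothetical helper).

    Each weight is perturbed with probability `mutate_rate` by Gaussian noise
    with standard deviation `mutate_power`; otherwise it is copied unchanged.
    The parent list is never modified.
    """
    rng = rng or random.Random()
    return [
        w + rng.gauss(0.0, mutate_power) if rng.random() < mutate_rate else w
        for w in weights
    ]


parent = [0.1, -0.4, 0.7, 0.0]
child = mutate_weights(parent, rng=random.Random(42))
print(parent, child)
```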

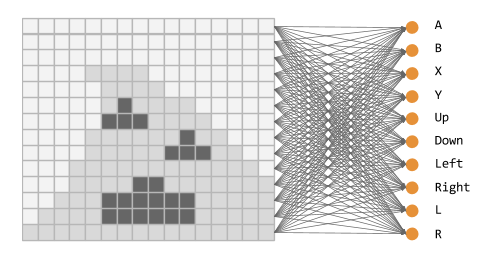

As shown above, our neural network architecture is fairly simple. We first convert the image input into a 14 × 16 grid that reduces each cell to “Road” or “Not Road.” We then feed that input directly into each of the 10 outputs, which represent the 10 action buttons on the SNES controller.
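A rough sketch of this input pipeline follows. It makes several assumptions that are not stated in the original project. First, a per-pixel boolean road mask has already been extracted from the frame. Second, the 14 × 16 grid means 14 rows by 16 columns; a 256 × 224 SNES frame splits evenly into 16 × 16-pixel blocks that way. Third, a cell counts as “Road” when at least half its pixels are road. Fourth, a button is pressed when its weighted sum exceeds a threshold. The helper names are hypothetical.

```python
def to_road_grid(road_mask, rows=14, cols=16):
    """Downsample a boolean per-pixel road mask (a list of pixel rows) into a
    rows x cols grid: 1 ("Road") if at least half the block is road, else 0."""
    height, width = len(road_mask), len(road_mask[0])
    cell_h, cell_w = height // rows, width // cols
    grid = []
    for r in range(rows):
        row = []
        for c in range(cols):
            block = [road_mask[y][x]
                     for y in range(r * cell_h, (r + 1) * cell_h)
                     for x in range(c * cell_w, (c + 1) * cell_w)]
            row.append(1 if 2 * sum(block) >= len(block) else 0)
        grid.append(row)
    return grid


def buttons_pressed(grid, weights, biases, threshold=0.0):
    """Direct input -> output layer: one weighted sum per button (10 buttons)."""
    inputs = [cell for row in grid for cell in row]  # 14 * 16 = 224 inputs
    pressed = []
    for w_row, bias in zip(weights, biases):
        activation = bias + sum(w * x for w, x in zip(w_row, inputs))
        pressed.append(activation > threshold)
    return pressed


# Usage: a 224 x 256 frame whose left half is road.
mask = [[x < 128 for x in range(256)] for _ in range(224)]
grid = to_road_grid(mask)
weights = [[0.0] * 224 for _ in range(10)]
weights[0] = [1.0] * 224  # button 0 responds to visible road
pressed = buttons_pressed(grid, weights, biases=[0.0] * 10)
print(pressed)
```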

Initially, 20 neural networks are randomly generated. Each is tested to see which ones are the “fittest,” meaning they perform best in the race. After each generation is tested, the 10 worst-performing networks are removed, while the remaining 10 undergo mutation and crossover to produce 10 new networks. Because we keep the original 10, each new generation is at least as good as the previous one. The new 20 networks are then tested for “survival of the fittest,” and the process repeats. For more information, see the paper “Evolving Neural Networks through Augmenting Topologies” by Kenneth O. Stanley and Risto Miikkulainen.
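The generational loop above can be sketched as a simplified, NEAT-inspired genetic algorithm. It keeps the top 10 of 20 genomes and breeds 10 children through uniform crossover and Gaussian mutation. This is not NEAT-python's actual implementation; speciation and topology growth are omitted. Each genome is a plain list of weights, and the toy `fitness` function is a hypothetical stand-in for “how well the car raced.” Because the survivors are carried over unchanged and this fitness is deterministic, the best score can never drop between generations.

```python
import random

POP_SIZE = 20
SURVIVORS = 10
GENOME_LEN = 8


def fitness(genome):
    # Toy stand-in for race performance: closer to an arbitrary target is better.
    return -sum((g - 0.5) ** 2 for g in genome)


def crossover(a, b, rng):
    # Uniform crossover: each gene comes from either parent with equal probability.
    return [x if rng.random() < 0.5 else y for x, y in zip(a, b)]


def mutate(genome, rng, rate=0.2, power=0.3):
    # Perturb a fraction of the genes with Gaussian noise.
    return [g + rng.gauss(0.0, power) if rng.random() < rate else g for g in genome]


def evolve(generations, seed=0):
    rng = random.Random(seed)
    population = [[rng.uniform(-1, 1) for _ in range(GENOME_LEN)]
                  for _ in range(POP_SIZE)]
    best_per_generation = []
    for _ in range(generations):
        # Rank by fitness; the top half survives unchanged (elitism).
        population.sort(key=fitness, reverse=True)
        best_per_generation.append(fitness(population[0]))
        survivors = population[:SURVIVORS]
        # Two distinct survivors are crossed over, then the child is mutated.
        children = [mutate(crossover(*rng.sample(survivors, 2), rng), rng)
                    for _ in range(POP_SIZE - SURVIVORS)]
        population = survivors + children
    return best_per_generation


history = evolve(10)
print(history)
```

In a real race, fitness can be noisy. A re-evaluated survivor might then score lower than before, so the “at least as good” guarantee holds strictly only when evaluation is deterministic.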

In the first generation, the neural network knows absolutely nothing and makes very dumb decisions.

By the third generation, it has learnt to go straight ahead, since covering a longer distance makes a network more “fit.” However, it still struggles to turn left to keep itself on the road.

By the fifth generation, it has learnt to turn, but it keeps needlessly bumping into other cars.

By generation 10, however, the neural network seems to have reached its maximum potential. The agent skilfully overtakes other cars with ease and has even learnt to use the Nitro boost when the road is straight and clear.

Testing the network

Aside from some difficulty recognizing cars in front of it (partly because of the way our image translation works), the car handles the race very well. We tested it by racing against actual people. It beats the human players seven out of ten times and finishes first in the race three out of ten times! We are very proud of our project and plan to extend it further in the future!

Our project is currently open-sourced at: https://github.com/AIDreamer/TopGearNEATAgent